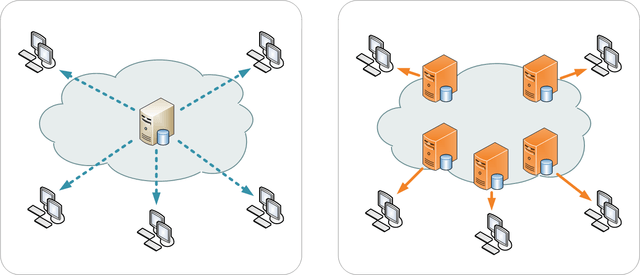

The advantage of hosting your website content on a Content Delivery Network (CDN) is that your content is distributed and stored across the globe, using the network of the CDN provider. Hosting your WordPress website's content on a CDN is an important WordPress optimization tip. Here is how to create your own Origin Pull CDN with just a few lines of PHP...

Content Delivery Network (CDN) advantages

Spreading your content usually means faster downloads for visitors, because the content is physically closer to them (not to mention the various distribution, caching and performance improvement techniques CDNs use). CDN providers usually have data centers and servers on nearly all continents, and so does your content. Examples of providers and services used for this are MaxCDN, CloudFront, Amazon S3 and Jet-Stream.

Origin Pull CDN (Content Delivery Network)

Here, an Origin Pull CDN means that the CDN servers pull your content from your website (the origin) when a file is first requested. You don't have to push or upload your content to the CDN provider. Read about the differences between Push CDNs and Origin Pull CDNs in Push Vs. Pull: The Tale of Two CDNs.
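To make the idea concrete, here is a minimal sketch of pull-through logic in PHP. It is not the script used later in this post: the function name `pull_from_origin`, the cache directory and the `$fetch` callback are all assumptions made for illustration. The first request for a path fetches it from the origin and stores it; later requests are served from the stored copy.

```php
<?php
// Hypothetical helper, for illustration only (no validation of $path here).
// $fetch is any callable that returns the file body from the origin,
// or null when the origin does not have the file.
function pull_from_origin(string $path, string $cacheDir, callable $fetch): ?string
{
    $target = rtrim($cacheDir, '/') . '/' . ltrim($path, '/');

    // Cache hit: the file was pulled earlier, the origin is not contacted.
    if (is_file($target)) {
        $cached = file_get_contents($target);
        return $cached === false ? null : $cached;
    }

    // Cache miss: pull the file from the origin on first request.
    $content = $fetch($path);
    if ($content === null) {
        return null;
    }

    // Create the directory structure recursively, then store the copy.
    $dir = dirname($target);
    if (!is_dir($dir)) {
        mkdir($dir, 0755, true);
    }
    file_put_contents($target, $content);

    return $content;
}
```

Passing the fetch step in as a callback keeps the sketch independent of how the origin is reached (cURL, `file_get_contents`, or something else).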

(image credits: Kanoha)

Create your own Origin Pull CDN with PHP

Improve your website performance

For small websites with not too much traffic, a CDN is pretty cheap nowadays: only a few cents per GB of traffic a month.

But it is more fun to create your own CDN alternative, isn't it? :-)

Suppose you own multiple websites with hosting packages and web space to spare. Why not store content from one website on the other, to offload the content and improve the speed and performance of your website? Offloaded files are served from a different hostname, so browsers can download them in parallel with the rest of your page.

This article is specifically for WordPress blogs, and the WP-Super-Cache plugin is required! Besides utilizing WordPress and the WP-Super-Cache plugin, you can also use IIS Outbound rewrite rules.

For this to happen, we need three files:

- a .htaccess file on the remote side to rewrite requests and to check if files already exist
- a PHP file to store the files on the remote side
- a file to delete the files when necessary

The code in this article is provided AS-IS and is not secured or production ready! But it does work. Use at your own risk.

Note: To clarify local and remote side:

- Local side: your main website
- Remote side: the place you put your files for offloading, e.g. the Content Delivery Network, or CDN. This can be more than one remote website.

.htaccess CDN rewrites

The .htaccess file checks whether files already exist on this remote side and rewrites requests for files that are not yet stored there.

    RewriteEngine On
    RewriteCond %{REQUEST_FILENAME} !-f
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteCond %{REQUEST_URI} ^/wp-content/(.*) [NC]
    RewriteRule .? /cacheme.php?url=/wp-content/%1 [L,QSA,R=301,NS]

The PHP Cacheme.php mechanism and file

This is the actual Origin Pull CDN mechanism.

Requests for files that do not yet exist on the remote side are sent to this cacheme.php file. It automatically creates the directory structure recursively if it does not exist yet. We use a cURL HEAD request (CURLOPT_NOBODY) to verify that the requested file exists on our local side, and store the file on the remote side if it does.
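Because cacheme.php takes a path from the query string, it is worth checking that path before touching the file system. The following is a minimal sketch of such a check and is not part of the original script. The function name `cdn_target_path` and its rules (only `/wp-content/` paths, no `..` segments, no `.php` files) are assumptions about what a reasonable check could look like.

```php
<?php
// Hypothetical helper: maps the "url" query parameter to a path below the
// CDN directory, or returns null when the request looks unsafe.
function cdn_target_path(string $cdnRoot, string $urlPath): ?string
{
    // Treat backslashes as slashes, so Windows-style input is checked too.
    $path = str_replace('\\', '/', $urlPath);

    // Only paths the rewrite rule can produce are accepted.
    if (strpos($path, '/wp-content/') !== 0 || strpos($path, "\0") !== false) {
        return null;
    }

    // Refuse directory traversal such as /wp-content/../../secret
    if (in_array('..', explode('/', $path), true)) {
        return null;
    }

    // Never store PHP files on the remote side.
    if (strtolower(pathinfo($path, PATHINFO_EXTENSION)) === 'php') {
        return null;
    }

    return rtrim($cdnRoot, '/\\') . $path;
}
```

In cacheme.php, a function like this could replace the direct use of `$_GET['url']`, answering with an error status whenever it returns null.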

Modify the following PHP code to your needs:

    <?php
    /**
     * Follow me on X/Twitter: @Jan_Reilink
     */

    // Windows Server path, as my site was hosted on Windows Server.
    // Forward slashes avoid escape sequences such as "\e" in double-quoted strings.
    $cdnroot = 'd:/www/example.com/www/example.org';
    if( !is_dir( $cdnroot ) ) {
      mkdir( $cdnroot, 0755, true );
    }

    // The rewrite rule passes the path as /wp-content/..., reject anything else
    $ogfile = isset( $_GET['url'] ) ? $_GET['url'] : '';
    if( strpos( $ogfile, '/wp-content/' ) !== 0 || strpos( $ogfile, '..' ) !== false ) {
      http_response_code( 400 );
      exit();
    }
    $ogserverurl = "http://www.example.org" . $ogfile;

    /**
     * Important PHP cURL information: don't turn off CURLOPT_SSL_VERIFYPEER
     * https://www.saotn.org/dont-turn-off-curlopt_ssl_verifypeer-fix-php-configuration/
     */
    // HEAD request (CURLOPT_NOBODY) to check the file exists on the local side
    $ch = curl_init( $ogserverurl );
    curl_setopt( $ch, CURLOPT_NOBODY, true );
    curl_exec( $ch );
    $retcode = curl_getinfo( $ch, CURLINFO_HTTP_CODE );
    curl_close( $ch );

    if( ( $retcode === 200 ) || ( $retcode === 302 ) ) {
      // my Windows Server website CDN path, mirroring the requested URL path
      $target = $cdnroot . $ogfile;
      if( !file_exists( $target ) ) {
        $path = dirname( $target );
        if( !is_dir( $path ) ) {
          // no output here: echoing before header() breaks the redirect
          mkdir( $path, 0755, true );
        }
        $content = file_get_contents( $ogserverurl );
        if( $content !== false ) {
          file_put_contents( $target, $content );
        }
      }
      header( "Location: $ogserverurl" );
      exit();
    }
    ?>

How to delete remote content

To delete the remote content, you can use and/or modify a PHP script like this one: Recursive Directory Delete Function. Update 2018-08-01: the lixlpixel.org URL mentioned no longer hosts the required PHP code function. Here's a Wayback Machine archive.org link for you.

Recursive Directory Delete Function in PHP

    <?php
    // ------------ lixlpixel recursive PHP functions -------------
    // recursive_remove_directory( directory to delete, empty )
    // expects path to directory and optional TRUE / FALSE to empty
    // ------------------------------------------------------------
    function recursive_remove_directory( $directory, $empty=FALSE )
    {
      // strip a trailing slash
      if( substr( $directory, -1 ) == '/' )
      {
        $directory = substr( $directory, 0, -1 );
      }
      if( !file_exists( $directory ) || !is_dir( $directory ) )
      {
        return FALSE;
      } elseif( is_readable( $directory ) )
      {
        $handle = opendir( $directory );
        while ( FALSE !== ( $item = readdir( $handle ) ) )
        {
          if( $item != '.' && $item != '..' )
          {
            $path = $directory.'/'.$item;
            if( is_dir( $path ) )
            {
              recursive_remove_directory( $path );
            } else{
              unlink( $path );
            }
          }
        }
        closedir( $handle );
        // with $empty set to TRUE the emptied directory itself is kept
        if( $empty == FALSE )
        {
          if( !rmdir( $directory ) )
          {
            return FALSE;
          }
        }
      }
      return TRUE;
    }
    // ------------------------------------------------------------
    ?>

In my test environment, I have a small locally hosted PHP script that fires an XMLHttpRequest (XHR) GET to the remote delete PHP script. This way I can fire multiple GET requests (and thus delete commands) asynchronously to multiple websites in my Origin Pull CDN network. Just be creative :-)
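The same fan-out can also be done server-side. As an alternative to XMLHttpRequest, here is a minimal sketch that uses PHP's `curl_multi` functions to send the GET requests in parallel. The host names, the `/delete.php` script name and both function names are assumptions for illustration. A real delete script should also require some kind of secret, which is left out here.

```php
<?php
// Hypothetical helper: builds one delete URL per remote site.
function build_delete_urls(array $cdnHosts, string $script = '/delete.php'): array
{
    $urls = [];
    foreach ($cdnHosts as $host) {
        $urls[] = 'https://' . $host . $script;
    }
    return $urls;
}

// Hypothetical helper: sends all GET requests in parallel and returns
// the HTTP status code per URL.
function fire_parallel_gets(array $urls, int $timeout = 10): array
{
    $multi = curl_multi_init();
    $handles = [];
    foreach ($urls as $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);
        curl_multi_add_handle($multi, $ch);
        $handles[$url] = $ch;
    }

    // Drive all transfers until none is running any more.
    do {
        $status = curl_multi_exec($multi, $running);
        if ($running) {
            curl_multi_select($multi, 1.0);
        }
    } while ($running && $status === CURLM_OK);

    $codes = [];
    foreach ($handles as $url => $ch) {
        $codes[$url] = curl_getinfo($ch, CURLINFO_HTTP_CODE);
        curl_multi_remove_handle($multi, $ch);
        curl_close($ch);
    }
    curl_multi_close($multi);

    return $codes;
}
```

A call such as `fire_parallel_gets( build_delete_urls( ['cdn1.example.com', 'cdn2.example.com'] ) )` would then contact both remote sites at once, where the two host names are placeholders.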

Activate your Origin Pull CDN

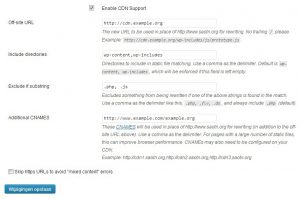

Log on to your WordPress Dashboard and go to the WP-Super-Cache settings. Under the "CDN" tab, configure your CDN URL, together with "Additional CNAMES" if you use more than one remote site.
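The CDN option works by rewriting the URLs of your static files so that they point at the CDN host. The sketch below only illustrates that idea and is not WP-Super-Cache's actual code: `rewrite_to_cdn`, the host names and the use of `crc32` to pick a host are assumptions.

```php
<?php
// Hypothetical illustration of CDN URL rewriting, not the plugin's code.
function rewrite_to_cdn(string $html, string $siteUrl, array $cdnUrls): string
{
    if (count($cdnUrls) === 0) {
        return $html;
    }

    // Match <site URL>/wp-content/... up to the closing quote or whitespace.
    $pattern = '#' . preg_quote(rtrim($siteUrl, '/'), '#')
             . '(/wp-content/[^"\'\s)>]+)#';

    $result = preg_replace_callback(
        $pattern,
        function (array $m) use ($cdnUrls): string {
            // A checksum of the path keeps the choice stable: the same
            // file always maps to the same CDN host.
            $index = abs(crc32($m[1])) % count($cdnUrls);
            return rtrim($cdnUrls[$index], '/') . $m[1];
        },
        $html
    );

    // preg_replace_callback returns null on error; fall back to the input.
    return $result ?? $html;
}
```

Only URLs below `/wp-content/` are touched, which matches the rewrite rule on the remote side; links to pages stay on the main website.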

Conclusion: creating a PHP-based Origin Pull CDN

In this post I showed you how to create your own Origin Pull CDN (Content Delivery Network) with just a few lines of PHP code, to boost WordPress performance.

I modified the scripts and created this post to show you what you can do to improve your website performance, but also all the fun things you can do with PHP (or any other language).

For some, creating such an Origin Pull CDN with PHP might sound stupid or useless, but it's fun to do :-)

If PHP and .htaccess files are not in your comfort zone, you can set up a Content Delivery Network (CDN) using IIS Outbound Rules. You can even create a global DNS load balancing and Varnish Cache (CDN) service on relatively cheap DigitalOcean droplets.


Hi, my name is Jan. I am not a hacker, coder, developer or guru. I am merely an application manager / systems administrator, doing my daily thing at Embrace – The Human Cloud. In the past I worked at CLDIN (CLouDINfra) and Vevida. With over 20 years of experience, my specialties include Windows Server, IIS, Linux (CentOS, Debian), security, PHP, websites & optimization. I blog at https://www.saotn.org.

Thanks for your post.

But now i must use Alibaba Cloud CDN. Can you help me please?

Regard

Sorry, can’t help you with that.

Thanks for your post, but i got the error, i can not use the above code, can you help me? Thank you very much

Hello minh kien, what is the error you get? The code might need some tweaking, depending on your set up of course, and unfortunately I cannot provide support for that.

Thanks for your shares, this work fine for me..!

Thank you.

Thx for the code, it helped a lot!

There is a typo in your htaccess, you added a space just before the L. It will cause an error.

Hi Antoine, thank you for your comment. I’ve removed the space, well noticed! :)

Very nice find and post WiZZarD, using outbound rewrite rules for this purpose :) Next time mod_proxy/mod_cache?