The Baidu spider (BaiduSpider user agent) can be a real pain to block, especially since it does not respect robots.txt as it should. This post shows you how to block the BaiduSpider bot based on its User-Agent string, using the IIS URL Rewrite Module.

Not all web crawlers or bots respect the robots.txt file, and BaiduSpider is one of them.

A bot is often also called a spider. Normally you would block a bot or spider using the following robots.txt:

```text
User-agent: BaiduSpider
Disallow: /
```

or, to block all bots:

```text
User-agent: *
Disallow: /
```

But this doesn’t work for blocking Baidu spider… 🙁
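robots.txt only works if the crawler chooses to obey it. As a rough illustration, Python's standard `urllib.robotparser` module shows what a *compliant* crawler concludes from the two files above. The `example.com` URL is a placeholder. Note that `robotparser` compares only the product token before the first `/` of the User-Agent, so the sketch passes the short name `Baiduspider` rather than a full User-Agent string.

```python
from urllib.robotparser import RobotFileParser

# The two robots.txt variants from this post.
BAIDU_ONLY = """\
User-agent: BaiduSpider
Disallow: /
"""

ALL_BOTS = """\
User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


baidu_only = build_parser(BAIDU_ONLY)
all_bots = build_parser(ALL_BOTS)

# A well-behaved crawler would check these answers before requesting a page.
print(baidu_only.can_fetch("Baiduspider", "https://example.com/page.html"))
print(baidu_only.can_fetch("Googlebot", "https://example.com/page.html"))
print(all_bots.can_fetch("Googlebot", "https://example.com/page.html"))
```

Nothing forces a crawler to run this check, which is why a server-side block is needed for bots that ignore the file.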

You can use the following IIS URL Rewrite Module rule to block the BaiduSpider User-Agent on your website in Windows Server IIS. Only requests for robots.txt are allowed; all other requests are blocked with a 403 Access Denied response.

To block more bots, extend the `pattern` attribute with multiple User-Agent strings separated by a pipe (|). For example: `pattern="Baiduspider|Bing"` or `pattern="Googlebot|Bing"`.
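To see how such a pipe-separated pattern behaves, here is a small Python sketch. It uses Python's `re` module as an approximation of URL Rewrite's regular expressions (which default to ECMAScript syntax); for a simple alternation like this the behavior is the same. The sample User-Agent strings and the `is_blocked` helper are assumptions for illustration only.

```python
import re

# Sample User-Agent strings, assumed for illustration.
SAMPLE_AGENTS = {
    "baidu": "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)",
    "bing": "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
}


def is_blocked(user_agent: str, pattern: str) -> bool:
    """Hypothetical helper: True if the pattern matches anywhere in the User-Agent."""
    # An unanchored pattern matches a substring, just like a URL Rewrite condition.
    # re.IGNORECASE plays the role of ignoreCase="true".
    return re.search(pattern, user_agent, re.IGNORECASE) is not None


pattern = "Baiduspider|Bing"
for name, agent in SAMPLE_AGENTS.items():
    print(name, is_blocked(agent, pattern))
```

Because the match is an unanchored, case-insensitive substring search, a short token such as `Bing` also matches `bingbot` and any other User-Agent that happens to contain those letters, so keep each alternative specific enough.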

Hint: search Saotn.org for more posts related to the IIS URL Rewrite Module!

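```xml
<configuration>
  <system.webServer>
    <rewrite>
      <rules>
        <!-- Block Baidu spider; merge this rule into the <rules> section of an existing web.config -->
        <rule name="block_BaiduSpider" stopProcessing="true">
          <match url="(.*)" />
          <conditions trackAllCaptures="true">
            <!-- Match requests whose User-Agent contains "Baiduspider" -->
            <add input="{HTTP_USER_AGENT}" pattern="Baiduspider" negate="false" ignoreCase="true" />
            <!-- ...unless the request is for robots.txt -->
            <add input="{URL}" pattern="^/robots\.txt" negate="true" ignoreCase="true" />
          </conditions>
          <action type="CustomResponse"
            statusCode="403"
            statusReason="Forbidden: Access is denied."
            statusDescription="Access is denied!" />
        </rule>
      </rules>
    </rewrite>
  </system.webServer>
</configuration>
```

Verifying the URL Rewrite rule to block Baidu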

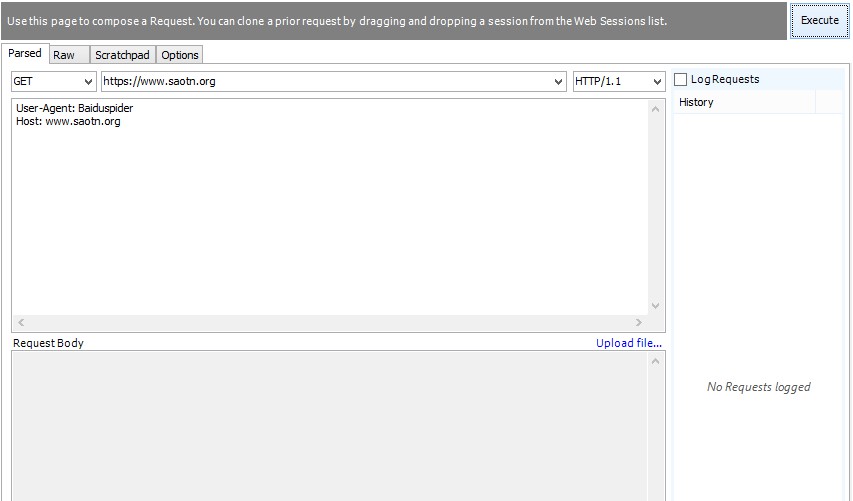

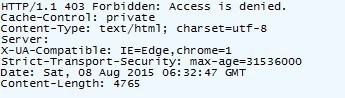

Using Fiddler‘s Composer option, to compose an HTTP request, you can easily verify the rewrite rule, as shown in the next two images.
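If you prefer a script over Fiddler, a similar check can be sketched in Python using only the standard library. `fetch_status` sends a GET request with a custom User-Agent header and returns the HTTP status code. So that the sketch runs without an IIS server, it starts a hypothetical local stand-in (`StandInHandler`) whose `rule_status` function models the rule's two conditions, again using Python's `re` as an approximation of URL Rewrite's regular expressions. The names, the sample User-Agent strings and the stand-in server are all assumptions; against a real site you would call `fetch_status` with your own URL instead.

```python
import re
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Sample User-Agent strings, assumed for illustration.
BAIDU_UA = "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def rule_status(path: str, user_agent: str) -> int:
    """Hypothetical model of the block_BaiduSpider rule: 403 if it fires, else 200."""
    ua_matches = re.search(r"Baiduspider", user_agent, re.IGNORECASE) is not None
    # negate="true" on the {URL} condition: it holds when the path is NOT robots.txt.
    not_robots = re.search(r"^/robots\.txt", path, re.IGNORECASE) is None
    # Conditions default to logicalGrouping="MatchAll", so both must hold.
    return 403 if ua_matches and not_robots else 200


class StandInHandler(BaseHTTPRequestHandler):
    """Local stand-in for the IIS site, so the sketch runs without IIS."""

    def do_GET(self):
        status = rule_status(self.path, self.headers.get("User-Agent", ""))
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default request logging


def fetch_status(url: str, user_agent: str) -> int:
    """Send a GET request with a custom User-Agent and return the status code."""
    # An empty ProxyHandler sends the request straight to the server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with opener.open(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses are raised as HTTPError; its code is the status.
        return error.code


# Start the stand-in on a free local port (port 0 lets the OS pick one).
server = ThreadingHTTPServer(("127.0.0.1", 0), StandInHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"

results = {
    "baidu_page": fetch_status(base_url + "/index.html", BAIDU_UA),
    "baidu_robots": fetch_status(base_url + "/robots.txt", BAIDU_UA),
    "browser_page": fetch_status(base_url + "/index.html", BROWSER_UA),
}
server.shutdown()
server.server_close()

for name, status in results.items():
    print(name, status)
```

With the rule in place on a real IIS site, you would expect a 403 for normal pages requested with a Baiduspider User-Agent, and the regular robots.txt response for `/robots.txt`.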

That’s it!